Chapter 5: Direct Learning and Human Potential

Adaptive Learning Applications

Predictive Learning Applications

Classical Conditioning of Emotions

John Watson and Rosalie Rayner (1920) famously (some would say infamously) applied classical conditioning procedures to establish a fear in a young child. Their demonstration was a reaction to an influential case study published by Sigmund Freud (1955, originally published in 1909) concerning a young boy called Little Hans. Freud, relying on correspondence with the 5-year-old’s father, concluded that the child’s fear of horses was the result of the psychodynamic defense mechanism of projection. He thought horses, wearing black blinders and having black snouts, symbolically represented the father, who wore black-rimmed glasses and had a mustache. Freud interpreted the fear as the result of an unconscious Oedipal conflict, even though it was known that Little Hans had witnessed a violent accident involving a horse shortly before the onset of the fear.

Watson and Rayner read this case history and considered it highly speculative and unconvincing (Agras, 1985). They felt that Pavlov’s research on classical conditioning provided principles that could more plausibly account for the onset of Little Hans’s fear. They set out to test their hypothesis with the 11-month-old son of a wet nurse working in the hospital where Watson was conducting research with white rats. The boy is often referred to, somewhat facetiously, as Little Albert, after the subject of Freud’s case history.

Albert initially demonstrated no fear and actually approached the white rat. Watson had an interest in child development and eventually published a successful book on this subject (Watson, 1928). He knew that infants innately fear very few things, among them painful stimuli, a sudden loss of support, and a startling noise. Watson and Rayner struck a steel rod behind Albert, producing a loud noise (the US), while he was with the rat (the CS). Seven such pairings were sufficient to result in Albert’s crying and withdrawing (CRs) from the rat when it was subsequently presented. It was also shown that Albert’s fear generalized to other objects, including a rabbit, a fur coat, and a Santa Claus mask.

Video

Watch the following video to see Watson’s use of classical conditioning to establish fears with Little Albert.

Desensitization Procedures

Mary Jones, one of Watson’s students, had the opportunity to undo a young child’s extreme fear of rabbits (Jones, 1924). The child, a boy named Peter, was fed his favorite food while the rabbit was gradually brought closer and closer to him. Eventually, he was able to hold the rabbit on his lap and play with it. This was an example of the combined use of desensitization and counter-conditioning procedures. The gradual increase in the intensity of the feared stimulus constituted the desensitization component. This procedure was designed to extinguish fear by permitting it to occur in mild form with no distressing event following it. Feeding the child in the presence of the feared stimulus constituted counter-conditioning of the fear response, pairing the rabbit with a powerful appetitive stimulus that should elicit a response that competes with fear. Direct in vivo (i.e., in the actual situation) desensitization is a very effective technique for addressing anxiety disorders, including shyness and social phobias (Donohue, Van Hasselt, & Hersen, 1994), public speaking anxiety (Newman, Hofmann, Trabert, Roth, & Taylor, 1994), and even panic attacks (Clum, Clum, & Surls, 1993).

Sometimes, direct in vivo treatment of an anxiety disorder is either not possible (e.g., fear of extremely rare or dangerous events) or inconvenient (e.g., the fear occurs in difficult-to-reach places or under difficult-to-control circumstances). In such instances it is possible to administer systematic desensitization (Wolpe, 1958), in which the person imagines the feared event under controlled conditions (e.g., in the therapist’s office). Usually the person is taught to relax as a competing response while progressing through a hierarchy (i.e., ordered list) of realistic situations. Sometimes there is an underlying dimension that can serve as the basis for the hierarchy. Jones used distance from the rabbit when working with Peter. One could use steps on a ladder to treat a fear of heights. Temporal distance from a feared event can sometimes be used to structure the hierarchy. For example, someone who is afraid of flying might be asked to relax while thinking of planning a vacation for the following year involving a flight, followed by ordering tickets 6 months in advance, picking out clothes, packing for the trip, etc. Imaginal systematic desensitization has been found effective for a variety of problems, including severe test anxiety (Wolpe & Lazarus, 1966), fear of humiliating jealousy (Ventis, 1973), and anger management (Smith, 1973, pp. 577-578). A review of the systematic desensitization literature concluded that “for the first time in the history of psychological treatments, a specific treatment reliably produced measurable benefits for clients across a wide range of distressing problems in which anxiety was of fundamental importance” (Paul, 1969).

Video

Watch the following video for a demonstration of the systematic desensitization procedure:

https://www.youtube.com/watch?v=9nMNDeQwi8A

In recent years, virtual reality technology (see Figure 5.15) has been used to treat fears of heights (Coelho, Waters, Hine, & Wallis, 2009) and flying (Wiederhold, Gevirtz, & Spira, 2001), in addition to other fears (Gorrindo & James, 2009; Wiederhold & Wiederhold, 2005). In one case study (Tworus, Szymanska, & Ilnicki, 2010), a soldier wounded three times in battle was successfully treated for posttraumatic stress disorder (PTSD). The use of virtual reality is especially valuable in instances where people have difficulty visualizing scenes and using imaginal desensitization techniques. Later, when we review control learning applications, we will again see how developing technologies enable and enhance treatment of difficult personal and social problems.

Video

Watch the following video for a demonstration of the systematic desensitization procedure using virtual reality:


Classical Conditioning of Word Meaning

Sticks and stones may break your bones

But words will never hurt you

We all understand that in a sense this old saying is true. We also recognize that words can inflict pain greater than that inflicted by sticks and stones. How do words acquire such power? Pavlov (1928) believed that words, through classical conditioning, acquired the capacity to serve as an indirect “second signal system” distinct from direct experience.

Presumably, the meaning of many words is established by pairing them with different experiences. That is, the meaning of a word consists of the learned responses to it (most of which cannot be observed) resulting from the context in which the word is learned. For example, if you close your eyes and think of an orange, you can probably “see”, “smell”, and “taste” the imagined orange. Novelists and poets are experts at using words to produce such rich imagery (DeGrandpre, 2000).

There are different types of evidence supporting the classical conditioning model of word meaning. Razran (1939), a bilingual Russian-American, translated and summarized early research from Russian laboratories. He coined the term “semantic generalization” to describe a different type of stimulus generalization from the type described earlier. In one study, a conditioned response established to a blue light occurred to the word “blue,” and vice versa. In these instances, generalization was based on similarity in meaning rather than similarity along a physical dimension. It has also been demonstrated that responses acquired to a word generalized to its synonyms (words having the same meaning) but not to its homophones (words sounding the same), another example of semantic generalization (Foley & Cofer, 1943).

In a series of studies, Arthur and Carolyn Staats and their colleagues experimentally established meaning using classical conditioning procedures (Staats & Staats, 1957, 1959; Staats, Staats, & Crawford, 1962; Staats, Staats, & Heard, 1959, 1961; Staats, Staats, Heard, & Nims, 1959). In one study (Staats, Staats, & Crawford, 1962), subjects were shown a list of words several times, with the word “LARGE” being followed by either a loud sound or shock. This resulted in heightened galvanic skin responses (GSR) and higher ratings of unpleasantness to “LARGE.” It was also shown that the unpleasantness rating was related to the GSR magnitude. These studies provide compelling support for classical conditioning being a basic process for establishing word meaning. In Chapter 6, we will consider the importance of word meaning in our overall discussion of language as an indirect learning procedure.

Known words can be used to establish the meaning of new words through higher-order conditioning. An important example involves using established words to discourage undesirable acts, reducing the need to rely on punishment. Suppose a parent says “No!” and slaps a child on the wrist as the child starts to stick a finger in an electric outlet. The slap should cause the child to withdraw her/his hand, and after such a pairing the word “no” alone should become sufficient to produce withdrawal. On a later occasion, the child reaches for a pot on the stove and the parent says “Hot, no!” Because “no” now elicits withdrawal, pairing it with “hot” could transfer the withdrawal response to the word “hot,” even though “hot” was never paired with a slap. This is an example of what Pavlov meant by a second signal system, with words substituting for direct experience. Here we see the power of classical conditioning principles in helping us understand language acquisition, including the use of words to establish meaning. We also see the power of language as a means of protecting a child from the “school of hard knocks” (and shocks, and burns, etc.).

Evaluative Conditioning

Evaluative conditioning is a term applied to the major research area examining how likes and dislikes are established by pairing objects with positive or negative stimuli in a classical conditioning paradigm (Walther, Weil, & Dusing, 2011). Jan De Houwer and his colleagues have summarized more than three decades of research documenting the effectiveness of such procedures in laboratory studies and in applications to social psychology and consumer science (De Houwer, 2007; De Houwer, Thomas, & Baeyens, 2001; Hofmann, De Houwer, Perugini, Baeyens, & Crombez, 2010). Recently, it has been demonstrated that pairing aversive health-related images with fattening foods resulted in those foods being evaluated more negatively, and subjects became more likely to choose healthful fruit rather than snack foods (Hollands, Prestwich, & Marteau, 2011). Similar findings were obtained with alcohol and drinking behavior (Houben, Havermans, & Wiers, 2010). A consumer science study demonstrated that college students preferred a pen previously paired with positive images when asked to make a selection (Dempsey & Mitchell, 2010). Celebrity endorsers have been shown to be very effective, particularly when there was an appropriate connection between the endorser and the product (Till, Stanley, & Priluck, 2008). It has even been shown that pairing the word “I” with positive trait words increased self-esteem; college students receiving this experience were not affected by negative feedback regarding their intelligence (Dijksterhuis, 2004). Sexual imagery has been used for years to promote products such as beer and cars (see Figure 5.16).

Video

Watch the following video for demonstrations of the use of evaluative conditioning in advertising:


When we think of adaptive behavior, we ordinarily do not think of emotions or word meaning. Instead, we usually think of these as conditions that motivate or energize the individual to take action. We now turn our attention to control learning, the type of behavior we ordinarily have in mind when we think of adaptation.

Control Learning Applications

Maintenance of Control Learning

We have seen that, even after learning has occurred, individuals remain sensitive to environmental correlations and contingencies. Previously acquired behaviors will stop occurring if the correlation (in the case of predictive learning) or contingency (in the case of control learning) is eliminated. This is the extinction process. We therefore need to understand the factors that maintain learned behavior when it is reinforced only intermittently, as is often the case under naturalistic conditions.

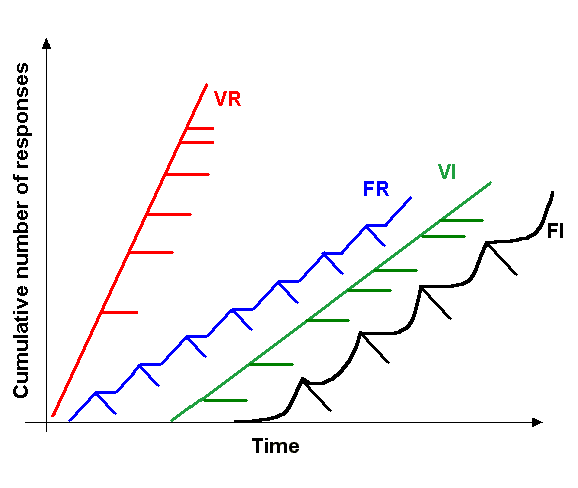

Much of B.F. Skinner’s empirical research demonstrated the effects of different intermittent schedules of reinforcement on the response patterns of pigeons and rats (cf. Ferster & Skinner, 1957). There is an infinite number of possible intermittent schedules between the extremes of never reinforcing and always reinforcing responses. How can we organize the possibilities in a meaningful way? As he did with control learning contingencies, Skinner developed a useful schema for categorizing intermittent schedules based on two considerations. The most fundamental distinction was between schedules requiring that a certain number of responses be completed (named ratio schedules) and those requiring only a single response once an opportunity becomes available (named interval schedules).

I often ask my classes if they can provide “real life” examples of ratio and interval schedules. One astute student preparing to enter the military suggested that officers try to get soldiers to believe that promotions occur according to ratio schedules (i.e., how often one does the right thing) but, in reality, they occur on the basis of interval schedules (i.e., doing the right thing when an officer happens to be observing). Working on a commission basis is a ratio contingency: the more items you sell, the more money you make. Calling a friend is an interval contingency: it does not matter how often you try if the person is not home, and only one call is necessary if the friend is available.

The other distinction Skinner made is based on whether the response requirement (in ratio schedules) or time requirement (in interval schedules) is constant or not. Contingencies based on constants were called “fixed” and those where the requirements changed were called “variable.” These two distinctions define the four basic reinforcement schedules (see Figure 5.10): fixed ratio (FR), variable ratio (VR), fixed interval (FI), and variable interval (VI).

Contingency

| | Response Dependent | Time Dependent |
|---|---|---|
| Fixed | FR | FI |
| Variable | VR | VI |

F = Fixed (constant pattern)

V = Variable (random pattern)

R = Ratio (number of responses)

I = Interval (time until opportunity)

Figure 5.10 Skinner’s Schema of Intermittent Schedules of Reinforcement.

In an FR schedule, reinforcement occurs after a constant number of responses. For example, an individual on an FR 20 schedule would be reinforced after every twentieth response. In comparison, an individual on a VR 20 schedule would be reinforced after an average of 20 responses, with the exact requirement varying unpredictably (e.g., 7 times in 140 responses with no pattern). In a fixed interval schedule, the opportunity for reinforcement becomes available after a constant amount of time has passed since the previous reinforced response. For example, on an FI 5-minute schedule the individual will be reinforced for the first response occurring after 5 minutes have elapsed since the previous reinforcement. A VI 5-minute schedule would include different interval lengths averaging 5 minutes between opportunities.
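The four schedule rules are precise enough to express as a short program. The Python sketch below is purely illustrative and is not drawn from Ferster and Skinner (1957): each class decides whether a single response is reinforced. The class names, the `respond` method, and the way variable requirements are generated (uniform random draws that average the schedule value) are assumptions made for this example; laboratory studies use carefully constructed lists of values instead.

```python
import random


class FixedRatio:
    """FR n: every n-th response is reinforced."""

    def __init__(self, n: int):
        self.n = n
        self.count = 0  # responses since the last reinforcer

    def respond(self, now: float) -> bool:
        # Time is ignored: only the number of responses matters.
        self.count += 1
        if self.count >= self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """VR n: the response requirement varies unpredictably, averaging n."""

    def __init__(self, n: int, rng: random.Random):
        self.n = n
        self.rng = rng
        self.count = 0
        self.required = self._draw()

    def _draw(self) -> int:
        # Assumption: requirements drawn uniformly from 1..(2n - 1), mean n.
        return self.rng.randint(1, 2 * self.n - 1)

    def respond(self, now: float) -> bool:
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()
            return True
        return False


class FixedInterval:
    """FI t: the first response at least t seconds after the last reinforcer pays off."""

    def __init__(self, t: float):
        self.t = t
        self.last = 0.0  # time of the previous reinforcer (session starts at 0)

    def respond(self, now: float) -> bool:
        if now - self.last >= self.t:
            self.last = now
            return True
        return False  # responses during the interval have no effect


class VariableInterval:
    """VI t: like FI, but the required interval varies, averaging t seconds."""

    def __init__(self, t: float, rng: random.Random):
        self.t = t
        self.rng = rng
        self.last = 0.0
        self.wait = self._draw()

    def _draw(self) -> float:
        # Assumption: intervals drawn uniformly from 0..2t seconds, mean t.
        return self.rng.uniform(0, 2 * self.t)

    def respond(self, now: float) -> bool:
        if now - self.last >= self.wait:
            self.last = now
            self.wait = self._draw()
            return True
        return False


def count_reinforcers(schedule, response_times) -> int:
    """Feed a sequence of response times (in seconds) to a schedule."""
    return sum(schedule.respond(t) for t in response_times)


if __name__ == "__main__":
    # 140 responses on FR 20 earn a reinforcer on every twentieth response.
    print(count_reinforcers(FixedRatio(20), range(1, 141)))
    # One response per minute for 15 minutes on FI 5-minute (300 s):
    # only the responses at 300, 600, and 900 s are reinforced.
    print(count_reinforcers(FixedInterval(300), range(60, 901, 60)))
```

Notice that the ratio classes ignore the time argument while the interval classes ignore how many responses were made, which mirrors the response-dependent versus time-dependent distinction in Skinner’s schema.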

It is possible to describe the behavioral pattern that emerges from exposure to an intermittent schedule of reinforcement as an example of control learning. That is, one can consider what constitutes the most effective (i.e., adaptive) pattern of responding to the different contingencies. The most adaptive pattern would obtain food as soon as possible while expending the least amount of effort. Individuals have a degree of control over the frequency and timing of reward in ratio schedules that they do not possess in interval schedules. For example, if the ratio value is 5 (i.e., FR 5), the faster one responds, the sooner the requirement is completed. If the interval value is 5 minutes (i.e., FI 5-minute), it does not matter how rapidly or how often one responds during the interval; it must elapse before the next response is reinforced. Moreover, because interval schedules require only one response once the interval has elapsed, lower rates of responding reduce effort while possibly delaying reinforcement.
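The contrast between ratio and interval control can be made concrete with a little arithmetic. The sketch below assumes, purely for illustration, that an individual responds at a perfectly steady pace, once every `irt` seconds (inter-response time), starting right after the previous reinforcer; the function names are hypothetical. Each function reports how long it takes to earn the next reinforcer and how many responses are spent.

```python
def fr_cost(ratio: int, irt: int) -> tuple[int, int]:
    """(seconds to reinforcement, responses made) on FR `ratio`."""
    # The requirement is met on the ratio-th response, so faster responding
    # (a smaller irt) directly shortens the wait.
    return ratio * irt, ratio


def fi_cost(interval: int, irt: int) -> tuple[int, int]:
    """(seconds to reinforcement, responses made) on FI `interval` seconds."""
    # Reinforcement goes to the first response at or after `interval` seconds.
    responses = -(-interval // irt)  # integer ceiling division
    return responses * irt, responses


if __name__ == "__main__":
    for irt in (6, 60, 70):
        print(f"one response every {irt:>2} s:  FR 5 -> {fr_cost(5, irt)}  "
              f"FI 300 s -> {fi_cost(300, irt)}")
```

Under these assumptions, speeding up from one response every 60 seconds to one every 6 seconds cuts the FR 5 wait from 300 to 30 seconds. On FI 300 seconds the same speed-up leaves the wait at 300 seconds but raises the cost from 5 to 50 responses, while slowing to one response every 70 seconds saves no responses and delays reinforcement to 350 seconds.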

Figure 5.11 Characteristic cumulative response patterns for the four basic schedules.

Figure 5.11 shows the characteristic cumulative response patterns produced by each of the four basic schedules. We may consider the optimal response pattern for each schedule and compare it with what actually occurs under laboratory conditions. High rates of responding on ratio schedules result in receiving food as soon as possible and maximize the amount of food received per session. Therefore, it is not surprising that ratio schedules produce higher response rates than interval schedules. FR schedules result in an “all-or-none” pattern with distinct post-reinforcement pauses whose length increases with the ratio value. In contrast, VR schedules, even with large ratio values, produce only brief pauses that occur irregularly. This difference is due to the predictability of the FR schedule: with experience, the individual can develop an expectancy regarding the amount of effort and time it will take to be rewarded. The amount of effort and time required for the next reward is unpredictable on VR schedules; therefore, one does not observe consistent post-reinforcement pauses.

With an FI schedule, it is possible to receive reinforcement as quickly as possible, with the least effort, by responding once immediately after the required interval has elapsed. For example, on an FI 5-minute schedule, responses occurring before the 5 minutes elapse have no effect on delivery of food. If one waits past 5 minutes, reinforcement is delayed. Thus, there is competition between the desires to obtain food as soon as possible and to conserve responses. The cumulative response pattern that emerges under such schedules has been described as the fixed-interval scallop and is a compromise between these two competing motives. There is an extended pause after reinforcement, similar to what occurs with the FR schedule, since once again the interval between reinforcements is predictable. However, unlike the burst of responding that follows the pause on FR schedules, the FI schedule results in a gradual increase in response rate until responding is occurring consistently when the reward becomes available. This response pattern results in receiving the food as soon as possible while conserving responses. Repetition of the pattern produces the characteristic scalloped cumulative response graph.

With a VI schedule, it is impossible to predict when the next opportunity for reinforcement will occur. Under these conditions, as with the VR schedule, the individual responds at a steady rate. Unlike the VR schedule, however, the pace depends on the average interval length: shorter average intervals sustain faster responding. As with the FI schedule, the VI pattern represents a compromise between the desires to obtain the reward as soon as possible and to conserve responses. An example might help clarify why the VI schedule works this way. Imagine you have two tickets to a concert and know two friends who would love to go with you. One of them is much chattier than the other. Let us say the chatty friend talks an average of 30 minutes on the phone, while the other averages only about 5 minutes. Assuming you would like to reach one of your friends as soon as possible, you would most likely try the less chatty one first, and call that friend more often if you get busy signals from both.


Applications of Control Learning

Learned Industriousness

The biographies of high-achieving individuals often describe them as enormously persistent. They seem to engage in a great deal of practice in their area of specialization, be it the arts, sciences, helping professions, business, athletics, etc. Successful individuals persevere, even after many “failures” over extended time periods. Legend has it that when Thomas Edison’s wife asked him what he had learned after all the time he had spent trying to find an effective filament for a light bulb, he replied, “I’ve just found 10,000 ways that won’t work!” Eisenberger (1992) reviewed the animal and human research literatures and coined the term learned industriousness to apply to the combination of persistence, willingness to expend maximum effort, and self-control (e.g., willingness to postpone gratification in the marshmallow test described in Chapter 1). Eisenberger found that strengthening any one of these characteristics in an individual carried over to the other two. Because intermittent schedules require individuals to keep responding through many unreinforced attempts, intermittent schedule effects have implications for learned industriousness.

Contingency Management of Substance Abuse

An adult who sells illegal drugs to support an addiction is highly motivated to commit illegal acts surreptitiously. As withdrawal symptoms become increasingly severe, punishment procedures lose their effectiveness. It would be prudent and desirable to enroll the addict in a drug-rehabilitation program involving medically monitored withdrawal procedures or medical provision of a legal substitute. Ideally, this would be done on a voluntary basis, but it could be court-mandated. Hopefully, successful treatment of the addiction would be sufficient to eliminate the motive for further criminal activity. Contingency management procedures have been used successfully for years to treat substance abusers. In one of the first such studies, vouchers exchangeable for goods and services were awarded for cocaine-free urine samples, assessed 3 times per week (Higgins, Delaney, Budney, Bickel, Hughes, & Foerg, 1991). A cash-based contingency management voucher program for alcohol abstinence has been implemented using combined urine and breath assessment procedures. It resulted in a doubling, from 35% to 69%, of alcohol-free test results (McDonell, Howell, McPherson, Cameron, Srebnik, Roll, & Ries, 2012).

Video

Watch the following video for a demonstration of a contingency management program for substance abuse:


Self-Control: Manipulating “A”s & “C”s to Affect “B”s

Self-control techniques have been described in previous chapters. Perri and Richards (1977) found that college students systematically using behavior-change techniques were more successful than others in regulating their eating, smoking, and studying habits. Findings such as these constitute the empirical basis for the self-control assignments in this book. In this chapter, we discussed the control learning ABCs. Now we will see how it is possible to manipulate the antecedents and consequences of one’s own behaviors in order to change those behaviors in a desired way. We saw how prompting may be used to speed the acquisition process. A prompt is any stimulus that increases the likelihood of a behavior. People have been shopping from lists and posting signs on their refrigerators for years. My students believe it rains “Post-it” notes in my office! Sometimes, it may be sufficient to address a behavioral deficit by placing prompts in appropriate locations. For example, you could put up signs or pictures as reminders to clean your room, organize clutter, converse with your children, exercise, etc. Place healthy foods in the front of the refrigerator and pantry so that you see them first. In the instance of behavioral excesses, your objective is to reduce or eliminate prompts (i.e., “triggers”) for your target behavior. Examples would include restricting eating to one location in your home, avoiding situations where you are likely to smoke, eat, or drink to excess, etc. Reduce the effectiveness of powerful prompts by keeping them out of sight and/or increasing the time it takes to get to them. For example, fattening foods could be kept in the back of the refrigerator wrapped in several bags.

Throughout this book we stress the human ability to transform the environment. You have the power to structure your surroundings to encourage desirable and discourage undesirable acts. Sometimes adding prompts and eliminating triggers is sufficient to achieve your personal objectives. If this is the case, it will become apparent as you graph your intervention phase data. If manipulating antecedents is insufficient, you can manipulate the consequences of your thoughts, feelings, or overt acts. If you are addressing a behavioral deficit (e.g., you would like to exercise or study more), you need to identify a convenient and effective reinforcer (i.e., reward, or appetitive stimulus). Straightforward possibilities include money (a powerful generalized reinforcer that can be earned immediately) and favored activities that can be engaged in daily (e.g., pleasure reading, watching TV, listening to music, engaging in online activities, playing video games, texting, etc.). Maximize the likelihood of success by starting with minimal requirements and gradually increasing the performance levels required to earn rewards (i.e., use the shaping procedure). For example, you might start out by walking slowly on a treadmill for brief periods of time, gradually increasing the speed and duration of sessions.

After implementing self-control manipulations of antecedents and consequences, it is important to continue to accurately record the target behavior during the intervention phase. If the results are less than satisfactory, you should determine whether there is an implementation problem (e.g., the reinforcer is too delayed or not powerful enough) or whether it is necessary to change the procedure. The current research literature is the best source for problem-solving strategies. The great majority of my students are quite successful in attaining their self-control project goals. Recently some have taken advantage of developing technologies in their projects. The smartphone is gradually becoming an all-purpose “ABC” device. As antecedents, students are using “to do” lists and alarm settings as prompts. They use the notepad to record behavioral observations. Some applications on smartphones and fitness devices enable you to monitor and record health habits such as the quality of your sleep and the number of calories you consume at meals. As a consequence (i.e., reinforcer), you could use access to games or listening to music, possibly on the phone itself.

At the end of Chapter 4, you were asked to create a pie chart consisting of slices representing the percentages of time you spend engaged in different activities (e.g., sleeping, eating, in class, completing homework assignments, working, commuting, and so on). One way of defining self-actualization is to consider how you would modify the slices of your pie (i.e., the number or durations of the different behaviors). An intervention plan manipulating antecedents and consequences could be developed to increase or decrease the amount of time spent in one or more of the activities. If you wished to increase the amount of time you spend on your schoolwork, you could control antecedents by always studying in a quiet private spot without distractions. You could reward yourself (e.g., with 15 minutes of “fun” time) each day you work ten percent more than your average baseline studying time (calculated over the two-week baseline period). For example, if you worked on average an hour a day on your homework (i.e., seven hours per week during the two-week baseline), you would need to work for 66 minutes to earn a daily reward. Once you have been successful in increasing the weekly average by ten percent (to seven hours and forty-two minutes per week), you could increase the time required to earn a reward by an additional ten percent. In this way, you would be implementing the shaping procedure until you achieved your final study time objective.
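The ten-percent shaping rule in this example is simple arithmetic, sketched below in Python. The function names and the 90-minute final objective are hypothetical, chosen only for illustration. Each new criterion is rounded up to a whole minute (an assumption), and integer arithmetic is used so that floating-point rounding cannot inflate a requirement.

```python
def raise_by_percent(criterion: int, percent: int = 10) -> int:
    """Raise a whole-number criterion by `percent`, rounding up, by at least 1."""
    # Ceiling of criterion * (100 + percent) / 100 using only integers.
    raised = -(-criterion * (100 + percent) // 100)
    return max(raised, criterion + 1)  # guarantees progress even from 0


def shaping_plan(baseline: int, goal: int, percent: int = 10) -> list[int]:
    """Successive daily criteria, from the first step above baseline up to the goal."""
    plan = []
    criterion = baseline
    while criterion < goal:
        criterion = min(raise_by_percent(criterion, percent), goal)
        plan.append(criterion)
    return plan


def hours_minutes(total_minutes: int) -> str:
    """Format a number of minutes as hours and minutes."""
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours} h {minutes} min"


if __name__ == "__main__":
    baseline = 60  # average minutes of studying per day during baseline
    goal = 90      # hypothetical final study-time objective
    plan = shaping_plan(baseline, goal)
    print("daily criteria (minutes):", plan)
    print("first weekly target:", hours_minutes(plan[0] * 7))
```

With a 60-minute baseline the plan is 66, 73, 81, and then 90 minutes a day, and the first weekly target is 7 hours and 42 minutes; each step would only be adopted once the previous criterion is being met consistently.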

Another way of defining self-actualization is to consider whether there are behaviors you would like to increase (i.e., behavioral deficits such as not reading enough pages in your textbooks) or decrease (i.e., behavioral excesses such as the number of times you check Facebook) as you strive to reach your potential and achieve your short- and long-term goals. Many examples of student self-control projects were listed at the end of Chapter 1 along with examples of how they were assessed. The same type of shaping process described above for a duration measure could be applied to the amount (e.g., pages completed) or frequency of a behavior. For example, if your baseline data indicated that you read an average of 20 pages per day, you could require completion of 22 pages to earn a daily reward. If you maintain this average for a week, you could raise the requirement by an additional ten percent, and so on. If you find you reach a plateau (i.e., a point at which you are no longer improving), you might keep the increase required at the same level (e.g., two pages) until achieving your goal. Best of luck in your efforts to apply the science of psychology to yourself!
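The same logic applies to amounts and frequencies. The Python sketch below, again using hypothetical function names and a hypothetical 30-page goal, shows the two options described in the paragraph: raising the daily page requirement by ten percent (rounded up to a whole page, an assumption), or, after a plateau, adding a fixed number of pages per step.

```python
def percent_step(pages: int, percent: int = 10) -> int:
    """Next daily page requirement: `percent` more, rounded up, at least one more page."""
    return max(-(-pages * (100 + percent) // 100), pages + 1)


def fixed_steps(current: int, goal: int, step: int) -> list[int]:
    """Requirements that grow by a constant `step` until the goal is reached."""
    if step <= 0:
        raise ValueError("step must be positive")
    plan = []
    while current < goal:
        current = min(current + step, goal)  # never overshoot the goal
        plan.append(current)
    return plan


if __name__ == "__main__":
    first = percent_step(20)  # baseline of 20 pages per day
    print("first requirement:", first)
    print("after a plateau, +2 pages per step:", fixed_steps(first, 30, 2))
```

Starting from a 20-page baseline, the first requirement is 22 pages; if progress then stalls, fixed two-page steps give 24, 26, 28, and 30 pages.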


Attributions

Figure 5.11 “Cumulative records” by PNG crusade bot at English Wikipedia is in the Public Domain

systematic desensitization: procedure designed to reduce anxiety by teaching an individual to relax while being exposed to a hierarchy (ordered list) of anxiety-eliciting events

counter-conditioning: procedure designed to substitute a desirable for an undesirable behavior (for example, substituting relaxation for anxiety)

posttraumatic stress disorder (PTSD): person reports experiencing recurrent flashbacks of a traumatic event more than a month after it happened; person avoids talking about or approaching any reminder of the event

second signal system: Pavlov's term for when words, through classical conditioning, acquired the capacity to influence behavior

evaluative conditioning: use of classical conditioning procedures to establish likes and dislikes by pairing neutral objects with appetitive and aversive stimuli

fixed ratio (FR) schedule: reinforcement occurs after a constant number of responses

variable ratio (VR) schedule: reinforcement occurs after an average number of responses

fixed interval (FI) schedule: the opportunity for reinforcement is available after a constant amount of time since the previous reinforced response

variable interval (VI) schedule: the opportunity for reinforcement is available after an average amount of time since the previous reinforced response

fixed-interval scallop: the cumulative response pattern characteristic of the FI reinforcement schedule; there is an extended pause after reinforcement followed by a gradual increase in response rate until the reward becomes available again

learned industriousness: the combination of persistence, willingness to expend maximum effort, and willingness to delay gratification